INSUBCONTINENT EXCLUSIVE:

Google today announced one of the biggest updates to its search algorithm in recent years.

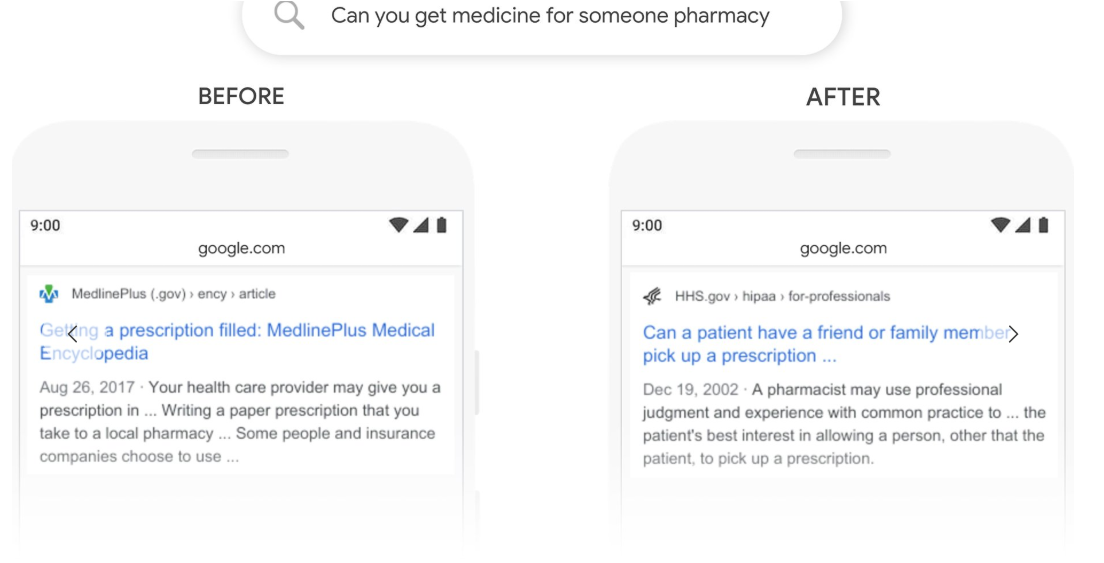

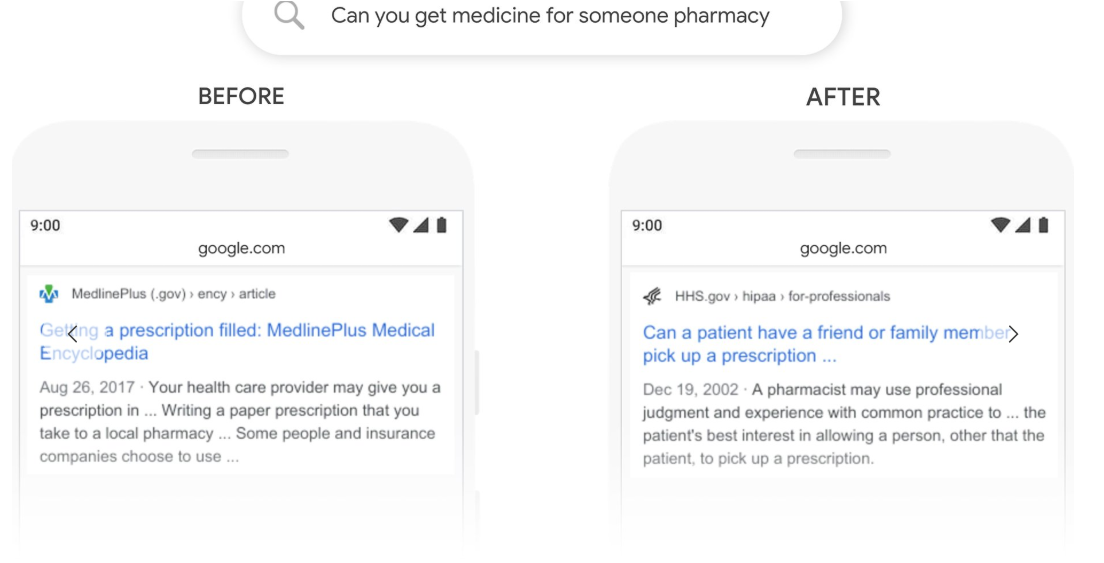

By using new neural networking techniques to better understand the intentions behind queries, Google says it can now offer more relevant results for about one in 10 searches in the U.S. in English (with support for other languages and locales coming later). For featured snippets, the update is already live globally.

In the world of search updates, where algorithm changes are often far more subtle, an update that affects 10% of searches is a pretty big deal (and will surely keep the world's SEO experts up at night).

Google notes that this update will work best for longer, more conversational queries, and in many ways, that's how Google would really like you to search these days, because it's easier to interpret a full sentence than a sequence of keywords.

The technology behind this new neural network is called "Bidirectional Encoder Representations from Transformers," or BERT. Google first talked about BERT last year and open-sourced the code for its implementation and pre-trained models. Transformers are one of the more recent developments in machine learning. They work especially well for data where the sequence of elements is important, which obviously makes them a useful tool for working with natural language and, hence, search queries.
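To see why the order of words matters for a query, consider a toy comparison. This is only an illustrative sketch, not how BERT or Google Search works: the two example queries and the helper names `bag_of_keywords` and `token_sequence` are made up for this illustration.

```python
from collections import Counter

def bag_of_keywords(query):
    """Treat the query as an unordered set of keyword counts (hypothetical helper)."""
    return Counter(query.lower().split())

def token_sequence(query):
    """Keep the words in the order they were typed (hypothetical helper)."""
    return query.lower().split()

# Two made-up queries with the same words but opposite meanings.
q1 = "flights from boston to denver"
q2 = "flights from denver to boston"

# As keyword bags the two queries are indistinguishable...
same_keywords = bag_of_keywords(q1) == bag_of_keywords(q2)
# ...but as ordered sequences they differ.
same_sequence = token_sequence(q1) == token_sequence(q2)

print(same_keywords, same_sequence)  # prints: True False
```

A system that only sees the keywords cannot tell these two searches apart, while one that models the sequence at least has the information it needs to do so.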

This BERT update also marks the first time Google is using its latest Tensor Processing Unit (TPU) chips to serve search results.

Ideally, this means that Google Search is now better able to understand exactly what you are looking for and provide more relevant search results and featured snippets. The update started rolling out this week, so chances are you are already seeing some of its effects in your search results.