Technology

Ah, Black Friday. The day of a zillion "deals": some good, many bad, most just meant to clear the shelves for next year's models.

Hidden amidst ten thousand "LOWEST PRICE EVER! ONE DAY ONLY!" e-mails, though, are a handful of solid sales on legitimately good stuff.

Whether you're trying to save some coin heading into Christmas or you just want to beef up your own gear collection, we've picked a few things that seemed worthwhile while trying to sift out most of the junk. We'll add new deals throughout the day as we hear about them.

(Pro tip: Want to check if something on Amazon is actually on sale, or if a seller just tinkered with the price ahead of time to make it look like you're getting a discount? Use a price-history tracker like camelcamelcamel to see the price over time.)

Amazon Devices

Amazon generally slashes prices on its own devices to get the Black Friday train moving, and this year is no different.

- The 4K Fire TV Stick, usually $50, is down to $25

- The non-4K Fire TV Stick, usually $40, is down to $20. For the $5 difference, though, I'd go with the 4K model above. Future-proofing!

- The incredibly good Kindle Oasis is about 30% off this week — $175 for the 8GB model (usually $249), or $199 for the 32GB model (usually $279)

- If you've got Alexa devices around your house and are looking to expand, the current generation Echo Dot is down to $22 (usually $49) while the bigger, badder Echo Plus is down to $99 (usually $150)
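Percent-off claims are easy to check yourself. Here's a minimal Python sketch using the prices quoted above; `percent_off` is our own illustrative helper, not part of any retailer's or price tracker's API.

```python
def percent_off(usual: float, sale: float) -> float:
    """Return the discount as a percentage of the usual price."""
    if usual <= 0:
        raise ValueError("usual price must be positive")
    return (usual - sale) / usual * 100


# Prices quoted in the list above, as (usual, sale) in dollars.
deals = {
    "Fire TV Stick 4K": (50, 25),
    "Kindle Oasis 8GB": (249, 175),
    "Kindle Oasis 32GB": (279, 199),
    "Echo Dot": (49, 22),
}

for name, (usual, sale) in deals.items():
    print(f"{name}: {percent_off(usual, sale):.0f}% off")
```

By this math the two Kindle Oasis models land at roughly 30% and 29% off, which squares with the "about 30%" figure.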

Google Devices


- Google's latest flagship Android phone, the very, very good Pixel 4, is $200 off at $599 (usually $799) for an unlocked model. The heftier Pixel 4 XL, meanwhile, is down to $699 from $899.

- The less current but still solid Pixel 3a is down to $299 (usually $399).

- The Nest Mini (formerly known as Google Home Mini) is down to $30 from its usual price of $49.

- The Nest Protect smoke alarm (both the wired and battery versions) is down to $99 (usually $119).

- The 4K-ready Chromecast Ultra is down to $49 (usually $69), while the non-4K Chromecast is currently $25 (usually $35).

Roku

With both Amazon and Google slashing at the prices for their streaming devices, Roku isn't looking to be left out. The company's 4K-friendly Roku Ultra is down to $48 (usually $100), complete with a pair of JBL headphones you can plug into the remote for almost-wireless listening.

Xbox, PlayStation, and Nintendo Switch

If you've yet to pick up any of this generation's consoles, now honestly isn't a terrible time (as long as you can do it at a discount). Both Microsoft and Sony are prepping to launch new consoles in 2020, but that means you've got years and years of really great games from this generation to pluck through, and it'll probably be a few months before there's much worthwhile/exclusive on the new consoles, anyway. Nintendo, meanwhile, just revised the Switch to significantly improve its battery life in August.

Microsoft has dumped the price on the 1 terabyte Xbox One X down to $349 (usually $499), including your choice of Gears of War 5, NBA 2K20, or the pretty much brand new Star Wars Jedi: Fallen Order. The Xbox One S, meanwhile, is down to $149 (usually $249) with copies of Minecraft, Sea of Thieves, and about $20 worth of Fortnite V-Bucks. (Be aware that the One S has no disc drive, so anything you play on that one must be a digital/downloaded copy. That's not a huge issue! But be aware of it, particularly if you've got a slower internet connection or limited monthly bandwidth.)

Likewise, Sony has a killer deal on the PlayStation 4: $199 gets you a 1TB PS4 and copies of God of War, The Last Of Us (Remastered), and Horizon Zero Dawn. The deal is available at most of the big box retailers (Best Buy/Walmart/GameStop/Target/etc), though it seems to be going in and out of stock everywhere, so you might have to poke around a bit.

Deals on the Switch console itself are few and far between so far (and many of the deals are for the older model with the weaker battery), but you can pick up a pair of Joy-Con controllers for $60 versus the usual $80.

Apple

Apple deals don't tend to get too wild on Black Friday, especially not on the latest generation hardware. This year, though, there's some surprisingly worthwhile stuff.

- The just-released AirPods Pro are down to $234 (usually $249). That's only $15 off, but hey, it's a discount!

- Costco is selling the 40mm Series 5 Apple Watch in Space Gray for $355, down from Costco's usual price of $385. The 44mm model is going for $385, down from $415.

- The latest-gen 128GB 10.2″ iPad is down to $329 (usually $429), while the 32GB model is down to $249 (usually $329). So basically you get the 128GB model for what the 32GB version usually costs.

Sonos

Looking to expand your Sonos setup? Many things in the company's line-up are on sale right now, including:

- The Sonos Beam (the smaller of the company's two sound bars), usually $399, is down to $299.

- The bigger soundbar, the Sonos Playbar, is down from $699 to $529

- The massive Playbase (like a soundbar, except you sit your entire TV on it) is down from $699 to $559.

- A two-pack of Play:1 speakers is going for $230 on Costco.com (usually $170-200 each), though you'll need to be a Costco member to access it.

Ridiculously cheap microSD cards

The cost of microSD cards has plummeted over the last year, seemingly bottoming out for Black Friday. SanDisk's 512GB microSD card was going for $100-$150 just a few months ago; today it's down to $64. Need a faster model? The 512GB Extreme MicroSDXC was $200 earlier this year, and now it's down to $80.

Steam games

Valve's annual Autumn Steam sale is underway, slashing prices on a bunch of top-notch games, like Grand Theft Auto 5 for $15 (usually $30), Portal 2 for a buck, The Witness for $20 (usually $40), Return of Obra Dinn for $16 (usually $20), Soul Calibur 6 for $18 (usually $60), or the just-released (and absurdly fun) Jackbox Party Pack 6 for $23 (usually $30).

Oh! And Valve's Steam Controller is down to $5 (from $50)… with the caveat that it's because they're discontinuing it, and honestly, for most games it's just an okay controller.


Read more: Gift Guide: Black Friday tech deals that are actually worth considering

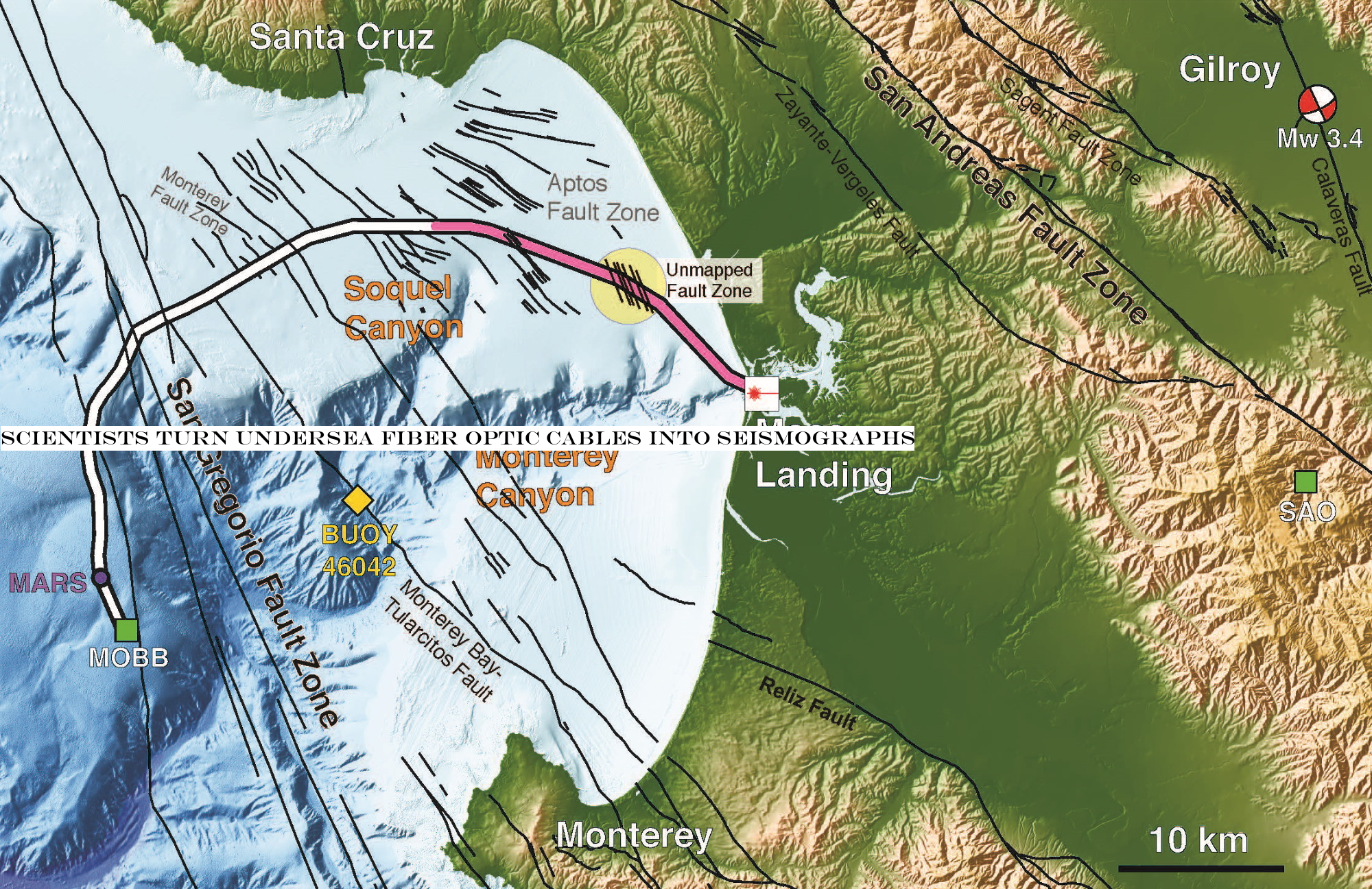

Monitoring seismic activity all over the world is an important task, but one that requires equipment to be at the site it's measuring, which is difficult in the middle of the ocean. But new research from Berkeley could turn existing undersea fiber optic cables into a network of seismographs, creating an unprecedented global view of the Earth's tectonic movements.

Seismologists get almost all their data from instruments on land, which means most of our knowledge about seismic activity is limited to a third of the planet's surface. We don't even know where all the faults are, since there's been no exhaustive study or long-term monitoring of the ocean floor.

"There is a huge need for seafloor seismology," explained lead study author Nathaniel Lindsey in a Berkeley news release. "Any instrumentation you get out into the ocean, even if it is only for the first 50 kilometers from shore, will be very useful."

Of course, the reason we haven't done so is that it's very hard to place, maintain, and access the precision instruments required for long-term seismic work underwater. But what if there were instruments already out there just waiting for us to take advantage of them? That's the idea Lindsey and his colleagues are pursuing with regard to undersea fiber optic cables.

These cables carry data over long distances, sometimes as part of the internet's backbones, and sometimes as part of private networks. But one thing they all have in common is that they use light to do so: light that gets scattered and distorted if the cable shifts or changes orientation.

By carefully monitoring this "backscatter" phenomenon, researchers can see exactly where the cable bends and to what extent, sometimes to within a few nanometers. That means they can observe a cable to find the source of seismic activity with an extraordinary level of precision.

The technique is called Distributed Acoustic Sensing, and it essentially treats the cable as if it were a series of thousands of individual motion sensors. The cable the team tested on is 20 kilometers' worth of the Monterey Bay Aquarium Research Institute's underwater data infrastructure, which was divided up into some ten thousand segments that can detect the slightest movement of the surface to which they're attached.
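For a rough sense of scale, those figures work out to about two meters of cable per segment. The Python sketch below is only that back-of-the-envelope arithmetic, not the team's actual DAS processing: it assumes exactly 20 km of cable and exactly 10,000 equal segments (the real count is "some ten thousand"), and the names `segment_length_m` and `segment_span_m` are made up for illustration.

```python
CABLE_LENGTH_M = 20_000  # 20 kilometers of cable, per the article
NUM_SEGMENTS = 10_000    # "some ten thousand" segments; exact count is an assumption


def segment_length_m(cable_length_m: float = CABLE_LENGTH_M,
                     num_segments: int = NUM_SEGMENTS) -> float:
    """Length of one virtual sensor, assuming equally sized segments."""
    return cable_length_m / num_segments


def segment_span_m(index: int,
                   cable_length_m: float = CABLE_LENGTH_M,
                   num_segments: int = NUM_SEGMENTS) -> tuple[float, float]:
    """(start, end) distance in meters of segment `index` from the instrument end."""
    if not 0 <= index < num_segments:
        raise IndexError("segment index out of range")
    length = segment_length_m(cable_length_m, num_segments)
    return index * length, (index + 1) * length


print(segment_length_m())    # meters of cable per segment
print(segment_span_m(2500))  # stretch of cable covered by segment 2500
```

Under these assumptions, a disturbance picked up on a given segment can be placed along the cable to within a couple of meters, which is what lets a single fiber stand in for thousands of individual sensors.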

"This is really a study on the frontier of seismology, the first time anyone has used offshore fiber-optic cables for looking at these types of oceanographic signals or for imaging fault structures," said Berkeley National Lab's Jonathan Ajo-Franklin.

After hooking up MBARI's cable to the DAS system, the team collected a ton of verifiable information: movement from a 3.4-magnitude quake miles inland, maps of known but unmapped faults in the bay, and water movement patterns that also hint at seismic activity.

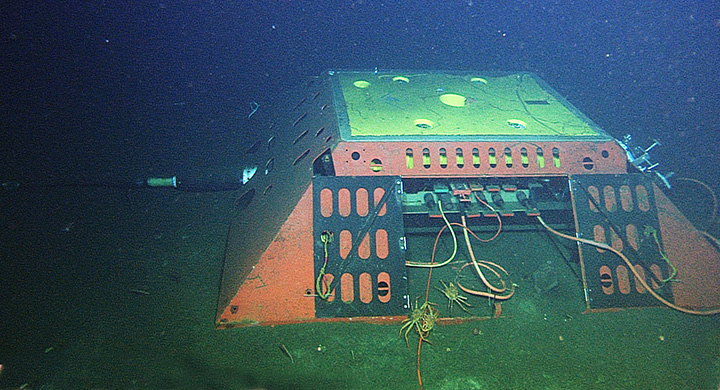

The main science node of the Monterey Accelerated Research System. Good luck keeping crabs out of there.

The best part, Lindsey said, is that you don't even need to attach equipment or repeaters all along the length of the cable. "You just walk out to the site and connect the instrument to the end of the fiber," he said.

Of course, most major undersea cables don't just have a big exposed end for random researchers to connect to. And the signals that the technology uses to measure backscatter could conceivably interfere with others, though there is work underway to test that and prevent it if possible.

If successful, the larger active cables could be pressed into service as research instruments, and could help illuminate the blind spot that seismologists have as far as the activity and features of the ocean floor. The team's work is published today in the journal Science.


Read more: Scientists turn undersea fiber optic cables into seismographs

In June, TechCrunch Ethicist in Residence Greg M. Epstein attended EmTech Next, a conference organized by the MIT Technology Review. The conference, which took place at MIT's famous Media Lab, examined how AI and robotics are changing the future of work.

Greg's essay "Will the Future of Work Be Ethical?" reflects on his experiences at the conference, which produced what he calls "a religious crisis, despite the fact that I am not just a confirmed atheist but a professional one as well." In it, Greg explores themes of inequality, inclusion and what it means to work in technology ethically, within a capitalist system and market economy.

Accompanying the story for Extra Crunch are a series of in-depth interviews Greg conducted around the conference, with scholars, journalists, founders and attendees.

Below, Greg speaks to two founders of innovative startups whose work provoked much discussion at the EmTech Next conference. Moxi, the robot assistant created by Andrea Thomaz of Diligent Robotics and her team, was a constant presence in the Media Lab reception hall immediately outside the auditorium in which all the main talks took place. And Prayag Narula of LeadGenius was featured, alongside leading tech anthropologist Mary Gray, in a panel on "Ghost Work" that sparked intense discussion throughout the conference and beyond.

Andrea Thomaz is the Co-Founder and CEO of Diligent Robotics. Image via MIT Technology Review

Could you give a sketch of your background?

Andrea Thomaz: I was always doing math and science, and did electrical engineering as an undergrad at UT Austin. Then I came to MIT to do my PhD. It really wasn't until grad school that I started doing robotics. I went to grad school interested in doing AI and was starting to get interested in this new machine learning that people were starting to talk about. In grad school, at the MIT Media Lab, Cynthia Breazeal was my advisor, and that's where I fell in love with social robots and making robots that people want to be around and are also useful.

Say more about your journey at the Media Lab?

My statement of purpose for the Media Lab, in 1999, was that I thought that computers that were smarter would be easier to use. I thought AI was the solution to HCI [Human-computer Interaction]. So I came to the Media Lab because I thought that was the mecca of AI plus HCI.

It wasn't until my second year as a student there that Cynthia finished her PhD with Rod Brooks and started at the Media Lab. And then I was like, "Oh wait a second. That's what I'm talking about."

Who is at the Media Lab now that's doing interesting work for you?

For me, it's kind of the same people. Pattie Maes has kind of reinvented her group since those days and is doing fluid interfaces; I always really appreciate the kind of things they're working on. And Cynthia, her work is still very seminal in the field.

So now, you're a CEO and Founder?

CEO and Co-Founder of Diligent Robotics. I had twelve years in academia in between those. I finished my PhD, went and I was a professor at Georgia Tech in computing, teaching AI and robotics and I had a robotics lab there.

Then I got recruited away to UT Austin in electrical and computer engineering. Again, teaching AI and having a robotics lab. Then at the end of 2017, I had a PhD student who was graduating and also interested in commercialization, my Co-Founder and CTO Vivian Chu.

Let's talk about the purpose of the human/robot interaction. In the case of your company, the robot's purpose is to work alongside humans in a medical setting, who are doing work that is not necessarily going to be replaced by a robot like Moxi. How does that work exactly?

One of the reasons our first target market [is] hospitals is, that's an industry where they're looking for ways to elevate their staff. They want their staff to be performing "at the top of their license." You hear hospital administrators talking about this because there's record numbers of physician burnout, nurse burnout, and turnover.

They really are looking for ways to say, "Okay, how can we help our staff do more of what they were trained to do, and not spend 30% of their day running around fetching things, or doing things that don't require their license?" That for us is the perfect market [for] collaborative robots. You're looking for ways to automate things that the people in the environment don't need to be doing, so they can do more important stuff. They can do all the clinical care.

In a lot of the hospitals we're working with, we're looking at their clinical workflows and identifying places where there's a lot of human touch, like nurses making an assessment of the patient. But then the nurse finishes making an assessment [and] has to run and fetch things. Wouldn't it be better if as soon as that nurse's assessment hit the electronic medical record, that triggered a task for the robot to come and bring things? Then the nurse just gets to stay with the patient.

Those are the kind of things we're looking for: places you could augment the clinical workflow with some automation and increase the amount of time that nurses or physicians are spending with patients.

So your robots, as you said before, do need human supervision. Will they always?

We are working on autonomy. We do want the robots to be doing things autonomously in the environment. But we like to talk about care as a team effort; we're adding the robot to the team and there's parts of it that the robot's doing and parts of it that the human's doing. There may be places where the robot needs some input or assistance because it's part of the clinical team. That's how we like to think about it: if the robot is designed to be a teammate, it wouldn't be very unusual for the robot to need some help or supervision from a teammate.

That seems different than what you could call Ghost Work.

Right. In most service robots being deployed today, there is this remote supervisor that is either logged in and checking in on the robots, or at least the robots have the ability to phone home if there's some sort of problem.

That's where some of this Ghost Work comes in. People are monitoring and keeping track of robots in the middle of the night. Certainly that may be part of how we deploy our robots as well. But we also think that it's perfectly fine for some of that supervision or assistance to come out into the forefront and be part of the face-to-face interaction that the robot has with some of its coworkers.

Since you could potentially envision a scenario in which your robots are monitored from off-site, in a kind of Ghost Work setting, what concerns do you have about the ways in which that work can be kind of anonymized and undercompensated?

Currently we are really interested in our own engineering staff having high-touch customer interaction that we're really not looking to anonymize. If we had a robot in the field and it was phoning home about some problem that was happening, at our early stage of the company, that is such a valuable interaction that in our company that wouldn't be anonymous. Maybe the CTO would be the one phoning in and saying, "What happened? I'm so interested."

I think we're still at a stage where all of the customer interactions and all of the information we can get from robots in the field are such valuable pieces of information.

But how are you envisioning best-case scenarios for the future? What if your robots really are so helpful that they're very successful and people want them everywhere? Your CTO is not going to take all those calls. How could you do this in a way that could make your company very successful, but also handle these responsibilities ethically?


Read more: Will the future of work be ethical? Founder perspectives

In June, TechCrunch Ethicist in Residence Greg M. Epstein attended EmTech Next, a conference organized by the MIT Technology Review. The conference, which took place at MIT's famous Media Lab, examined how AI and robotics are changing the future of work.

Greg's essay "Will the Future of Work Be Ethical?" reflects on his experiences at the conference, which produced what he calls "a religious crisis, despite the fact that I am not just a confirmed atheist but a professional one as well." In it, Greg explores themes of inequality, inclusion and what it means to work in technology ethically, within a capitalist system and market economy.

Accompanying the story for Extra Crunch are a series of in-depth interviews Greg conducted around the conference, with scholars, journalists, founders and attendees.

Below he speaks to two conference attendees who had crucial insights to share. Meili Gupta is a high school senior at Phillips Exeter Academy, an elite boarding school in New Hampshire; Gupta attended the EmTech Next conference with her mother and has attended with family in previous years as well; her voice and thoughts on privilege and inequality in education and technology are featured prominently in Greg's essay. Walter Erike is a 31-year-old independent consultant and SAP Implementation Senior Manager from Philadelphia. Between conference sessions, he and Greg talked about diversity and inclusion at tech conferences and beyond.

Meili Gupta is a senior at Phillips Exeter Academy. Image via Meili Gupta

Greg Epstein: How did you come to be at EmTech Next?

Meili Gupta: I am a rising high school senior at Phillips Exeter Academy; I'm one of the managing editors for my school's science magazine, called Matter Magazine.

I [also] attended the conference last year. My parents have come to these conferences before, and that gave me an opportunity to come. I am particularly interested in the MIT Technology Review because I've grown up reading it.

You are the Managing Editor of Matter, a magazine about STEM at your high school. What subjects that Matter covers are most interesting to you?

This year we published two issues. The first featured a lot of interviews from top [AI] professors like Professor Fei-Fei Li, at Stanford. We did a review for her and an interview with Professor Olga Russakovsky at Princeton. That was an AI special issue and, being at this conference, you hear about how AI will transform industries.

The second issue coincided with Phillips Exeter Global Climate Action Day. We focused both on environmentalism clubs at Exeter and environmentalism efforts worldwide. I think Matter, as the only STEM magazine on campus, has a responsibility in doing that.

AI and climate: in a sense, you've already dealt with this new field people are calling the ethics of technology. When you hear that term, what comes to mind?

As a consumer of a lot of technology and as someone of the generation who has grown up with a phone in my hand, I'm aware my data is all over the internet. I've had conversations [with friends] about personal privacy and if I look around the classroom, most people have covers for the cameras on their computers. This generation is already aware [of] ethics whenever you're talking about computing and the use of computers.

About AI specifically, as someone who's interested in the field and has been privileged to be able to take courses and do research projects about that, I'm hearing a lot about ethics with algorithms, whether that's fake news or bias or about applying algorithms for social good.

What are your biggest concerns about AI? What do you think needs to be addressed in order for us to feel more comfortable as a society with increased use of AI?

That's not an easy answer; it's something our society is going to be grappling with for years. From what I've learned at this conference, from what I've read and tried to understand, it's a multidimensional solution. You're going to need computer programmers to learn the technical skills to make their algorithms less biased. You're going to need companies to hire those people and say, "This is our goal; we want to create an algorithm that's fair and can do good." You're going to need the general society to ask for that standard. That's my generation's job, too. WikiLeaks, a couple of years ago, sparked the conversation about personal privacy and I think there's going to be more sparks.

Seems like your high school is doing some interesting work in terms of incorporating both STEM and a deeper, more creative than usual focus on ethics and exploring the meaning of life. How would you say that Exeter in particular is trying to combine these issues?

I'll give a couple of examples of my experience with that in my time at Exeter, and I'm very privileged to go to a school that has these opportunities and offerings for its students.

Don't worry, that's in my next question.

Absolutely. With the computer science curriculum, starting in my ninth grade they offered a computer science 590 about [introduction to] artificial intelligence. In the fall another 590 course was about self-driving cars, and you saw the intersection between us working in our robotics lab and learning about computer vision algorithms. This past semester, a couple students, and I was involved, helped to set up a 999: an independent course which really dove deep into machine learning algorithms. In the fall, there's another 590 I'll be taking called social innovation through software engineering, which is specifically designed for each student to pick a local project and to apply software, coding or AI to a social good project.

I've spent 15 years working at Harvard and MIT. I've worked around a lot of smart and privileged people and I've supported them. I'm going to ask you a question about Exeter and about your experience as a privileged high school student who is getting a great education, but I don't mean it from a perspective of it's now me versus you.

Of course you're not.

I'm trying to figure this out for myself as well. We live in a world where we're becoming more prepared to talk about issues of fairness and justice. Yet by even just providing these extraordinary educational experiences to people like you and me and my students or whomever, we're preparing some people for that world better than others. How do you feel about being so well prepared for this sort of world to come that it can actually be… I guess my question is, how do you relate to the idea that even the kinds of educational experiences that we're talking about are themselves deepening the divide between haves and have nots?

I completely agree that the issue between haves and have nots needs to be talked about more, because inequality between the upper and the lower classes is growing every year. This morning, Mr. Isbell from Georgia Tech's talk was really inspiring. For example, at Phillips Exeter, we have a social service club called ESA which houses more than 70 different social service clubs. One I'm involved with, junior computer programming, teaches programming to local middle school students. That's the type of thing, at an individual level and smaller scale, that people can try to help out those who have not been privileged with opportunities to learn and get ahead with those skills.

What Mr. Isbell was talking about this morning was at a university level and also tying in corporations to bridge that divide. I don't think that the issue itself should necessarily scare us from pushing forward to the frontier to say, the possibility that everybody who does not have a computer science education in five years won't have a job.

Today we had that debate about role or people's jobs and robot taxes. That's a very good debate to have, but it sometimes feeds a little bit into the AI hype and I think it may be a disgrace to society to try to pull back technology, which has been shown to have the power to save lives. It can be two transformations that are happening at the same time. One, that's trying to bridge an inequality and is going to come in a lot of different and complicated solutions that happen at multiple levels, and the second is allowing for a transformation in technology and AI.

What are you hoping to get out of this conference for yourself, as a student, as a journalist, or as somebody who's going into the industry?

The theme for this conference is the future of the workforce. I'm a student. That means I'm going to be the future of the workforce. I was hoping to learn some insight about what I may want to study in college. After that, what type of jobs do I want to pursue that are going to exist and be in demand and really interesting, that have an impact on other people? Also, as a student, in particular that's interested in majoring in computer science and artificial intelligence, I was hoping to learn about possible research projects that I could pursue in the fall with this 590 course.

Right now, I'm working on a research project with a Professor at the University of Maryland about eliminating bias in machine learning algorithms. What type of dataset do I want to apply that project to? Where is the need or the attention for correcting bias in the AI algorithms?

As a journalist, I would like to write a review summarizing what I've learned so other [Exeter students] can learn a little too.

What would be your biggest critique of the conference? What could be improved?


Read more: Will the future of work be ethical? Future leader perspectives

In June, TechCrunch Ethicist in Residence Greg M. Epstein attended EmTech Next, a conference organized by the MIT Technology Review. The conference, which took place at MIT's famous Media Lab, examined how AI and robotics are changing the future of work.

Greg's essay "Will the Future of Work Be Ethical?" reflects on his experiences at the conference, which produced what he calls "a religious crisis, despite the fact that I am not just a confirmed atheist but a professional one as well." In it, Greg explores themes of inequality, inclusion and what it means to work in technology ethically, within a capitalist system and market economy.

Accompanying the story for Extra Crunch are a series of in-depth interviews Greg conducted around the conference, with scholars, journalists, founders and attendees.

Below he speaks to two key organizers: Gideon Lichfield, the editor in chief of the MIT Technology Review, and Karen Hao, its artificial intelligence reporter. Lichfield led the creative process of choosing speakers and framing panels and discussions at the EmTech Next conference, and both Lichfield and Hao spoke and moderated key discussions.

Gideon Lichfield is the editor in chief at MIT Technology Review. Image via MIT Technology Review

Greg Epstein: I want to first understand how you see your job — what impact are you really looking to have?

Gideon Lichfield: I frame this as an aspiration. Most of the tech journalism, most of the tech media industry that exists, is born in some way of the era just before the dot-com boom. When there was a lot of optimism about technology. And so I saw its role as being to talk about everything that technology makes possible. Sometimes in a very negative sense. More often in a positive sense. You know, all the wonderful ways in which tech will change our lives. So there was a lot of cheerleading in those days.

In more recent years, there has been a lot of backlash, a lot of fear, a lot of dystopia, a lot of all of the ways in which tech is threatening us. The way I've formulated the mission for Tech Review would be to say, technology is a human activity. It's not good or bad inherently. It's what we make of it.

The way that we get technology that has fewer toxic effects and more beneficial ones is for the people who build it, use it, and regulate it to make well informed decisions about it, and for them to understand each other better. And I said the role of a tech publication like Tech Review, one that is under a university like MIT, probably uniquely among tech publications, we're positioned to make that our job. To try to influence those people by informing them better and instigating conversations among them. And that's part of the reason we do events like this. So that ultimately better decisions get taken and technology has more beneficial effects. So that's like the high level aspiration. How do we measure that day to day? That's an ongoing question. But that's the goal.

Yeah, I mean, I would imagine you measure it qualitatively. In the sense that… What I see when I look at a conference like this is, I see an editorial vision, right? I mean that I'm imagining that you and your staff have a lot of sort of editorial meetings where you set, you know, what are the key themes that we really need to explore. What do we need to inform people about, right?

Yes.

What do you want people to take away from this conference then?

A lot of the people in the audience work at medium and large companies. And they&re thinking about…what effect does automation and AI going to have in their companies? How should it affect their workplace culture? How should it affect their high end decisions? How should it affect their technology investments? And I think the goal for me is, or for us is, that they come away from this conference with a rounded picture of the different factors that can play a role.

There are no clear answers. But they ought to be able to think in an informed and in a nuanced way. If we&re talking about automating some processes, or contracting out more of what we do to a gig work style platform, or different ways we might train people on our workforce or help them adapt to new job opportunities, or if we&re thinking about laying people off versus retraining them. All of the different implications that that has, and all the decisions you can take around that, we want them to think about that in a useful way so that they can take those decisions well.

You&re already speaking, as you said, to a lot of the people who are winning, and who are here getting themselves more educated and therefore more likely to just continue to win. How do you weigh where to push them to fundamentally change the way they do things, versus getting them to incrementally change?

That's an interesting question. I don't know that we can push people to fundamentally change. We're not a labor movement. What we can do is put people from labor movements in front of them and have those people speak to them and say, "Hey, these are the consequences that the decisions you're taking are having on the people we represent." Part of the difficulty with this conversation has been that it has been taking place, up till now, mainly among the people who understand the technology and its consequences. Which was the people building it and then a small group of scholars studying it. Over the last two or three years I've gone to conferences like ours and other people's, where issues of technology ethics are being discussed. Initially it really was only the tech people and the business people who were there. And now you're starting to see more representation. From labor, from community organizations, from minority groups. But it's taken a while, I think, for the understanding of those issues to percolate and then people in those organizations to take on the cause and say, yeah, this is something we have to care about.

In some ways this is a tech ethics conference. If you labeled it as such, would that dramatically affect the attendance? Would you get fewer of the actual business people to come to a tech ethics conference rather than a conference that's about tech but that happened to take on ethical issues?

Yeah, because I think they would say it's not for them.

Right.

Business people want to know, what are the risks to me? What are the opportunities for me? What are the things I need to think about to stay ahead of the game? The case we can make is that ethical considerations are part of that calculus. You have to think about what are the risks going to be to you of, you know, getting rid of all your workforce and relying on contract workers. What does that do to those workers and how does that play back in terms of a risk to you?

Yes, you've got Mary Gray, Charles Isbell, and others here with serious ethical messages.

What about the idea of giving back versus taking less? There was an L.A. Times op-ed recently, by Joseph Menn, about how it's time for tech to give back. It talked about how 20% of Harvard Law grads go into public service after their graduation but if you look at engineering graduates, the percentage is smaller than that. But even going beyond that perspective, Anand Giridharadas, popular author and critic of contemporary capitalism, might say that while we like to talk about "giving back," what is really important is for big tech to take less. In other words: pay more taxes. Break up their companies so they're not monopolies. To maybe pay taxes on robots, that sort of thing. What's your perspective?

I don't have a view on either of those things. I think the interesting question is really, what can motivate tech companies, what can motivate anybody who's winning a lot in this economy, to either give back or take less? It's about what causes people who are benefiting from the current situation to feel they need to also ensure other people are benefiting.

Maybe one way to talk about this is to raise a question I've seen you raise: what the hell is tech ethics anyway? I would say there isn't a tech ethics. Not in the philosophy sense your background is from. There is a movement. There is a set of questions around it, around what should technology companies' responsibility be? And there's a movement to try to answer those questions.

A bunch of the technologies that have emerged in the last couple of decades were thought of as being good, as being beneficial. Mainly because they were thought of as being democratizing. And there was this very naïve Western viewpoint that said if we put technology and power in the hands of the people they will necessarily do wise and good things with it. And that will benefit everybody.

And these technologies, including the web, social media, smart phones, you could include digital cameras, you could include consumer genetic testing, all things that put a lot more power in the hands of the people, have turned out to be capable of having toxic effects as well.

That took everybody by surprise. And the reason that has raised a conversation around tech ethics is that it also happens that a lot of those technologies are ones in which the nature of the technology favors the emergence of a dominant player. Because of network effects or because they require lots of data. And so the conversation has been, what is the responsibility of that dominant player to design the technology in such a way that it has fewer of these harmful effects? And that again is partly because the forces that in the past might have constrained those effects, or imposed rules, are not moving fast enough. It's the tech makers who understand this stuff. Policy makers and civil society have been slower to catch up to what the effects are. They're starting to now.

This is what you are seeing now in the election campaign: a lot of the leading candidates have platforms that are about the use of technology and about breaking up big tech. That would have been unthinkable a year or two ago.

So the discussion about tech ethics is essentially saying these companies grew too fast, too quickly. What is their responsibility to slow themselves down before everybody else catches up?

Another piece that interests me is how sometimes the "giving back," the generosity of big tech companies or tech billionaires, or whatever it is, can end up being a smokescreen. A way to ultimately persuade people not to regulate. Not to take their own power back as a people. Is there a level of tech generosity that is actually harmful in that sense?

I suppose. It depends on the context. If all that's happening is corporate social responsibility drives that involve dropping money into different places, but there isn't any consideration of the consequences of the technology itself those companies are building and their other actions, then sure, it's a problem. But it's also hard to say giving billions of dollars to a particular cause is bad, unless what is happening is that then the government is shirking its responsibility to fund those causes because it's coming out of the private sector. I can certainly see the U.S. being particularly susceptible to this dynamic, where government sheds responsibility. But I don't think we're necessarily there yet.


In June, TechCrunch Ethicist in Residence Greg M. Epstein attended EmTech Next, a conference organized by the MIT Technology Review. The conference, which took place at MIT's famous Media Lab, examined how AI and robotics are changing the future of work.

Greg's essay, Will the Future of Work Be Ethical? reflects on his experiences at the conference, which produced what he calls "a religious crisis, despite the fact that I am not just a confirmed atheist but a professional one as well." In it, Greg explores themes of inequality, inclusion and what it means to work in technology ethically, within a capitalist system and market economy.

Accompanying the story for Extra Crunch are a series of in-depth interviews Greg conducted around the conference, with scholars, journalists, founders and attendees.

Below, Greg speaks to two academics who were key EmTech Next speakers. First is David Autor, one of the world's most prominent economists. Autor's lecture, Work of the Past, Work of the Future, originally delivered as the prestigious 2019 Richard T. Ely Lecture at the Annual Meeting of the American Economic Association, formed the basis for the opening presentation at the EmTech Next conference.

Susan Winterberg, an academic who studies business and ethics, was a panelist who brought important, even stirring insights about the devastating impact automation can have on communities and how companies can succeed by protecting people against those effects.

David Autor is the Ford Professor of Economics at MIT. Image via MIT Technology Review

Greg Epstein: Who do you see as the audience for your work — is it more labor or management, and how do different audiences engage with it differently?

David Autor: My primary audience, it can be argued, is other scholars. But I am aware of and pleased that my work has reached beyond that narrow group. I'm aware that it is discussed by policymakers and others and I'm sort of driven by trying to understand what is changing, who is affected, what are the opportunities, what are the challenges.

Could you help me to get a sense, to the best of your knowledge, of some of the key ways your ideas about the "Work of the Past, [and the] Work of the Future" have been discussed by corporations or sort of those in management and business ownership roles and also by labor organizations or unions, etc.?

I met twice with President Obama. I have spoken with many people in senior governmental policy positions. I've spoken to a lot of private sector audiences as well, including private sector research corporations like McKinsey and so on.

I have spoken with labor people. Labor folks were initially quite hostile to my work. I got a huge amount of pushback and have throughout my career, for example, from EPI, the Economic Policy Institute, which is sort of a union shop in DC. The guy who was their chief economist for a long time, Larry Mishel, started attacking my first papers before they were even published, and it's never stopped.

But I mean increasingly, and I find that super irritating.

In the last couple of years, I think there's been a lot more receptivity to this discussion from many sides. I'm increasingly of the view that organized labor needs to have a more constructive role, that it's become too marginalized. There might have been a time in the U.S. when it was too powerful, but now it's too powerless.

I think organized labor accepted that the world's changed in ways that are not just because of mean bosses and politicians, but there are underlying economic forces that impact the work people do. So they find the work illuminating.

What is your relationship with some of the more socialistic folks on the Democratic side?

I'm not into that. I believe in the value of the market system. I believe that it has a lot of rough edges, but I don't think there's a better system available that I know of. I'm very sympathetic towards market economies like Sweden and Norway and so on. I think the U.S. should move more in that direction. But those are all just variants of market economies. And actually, I think even what's most often called socialism in the U.S. is actually just really asking for a different variant of the market system.

That's why I'm curious: if somebody from the camp of Alexandria Ocasio-Cortez or Bernie Sanders or even Elizabeth Warren were to call your office and say, all right, we want your perspective on how right are we getting it, or where would you advise us to course correct in our economic message? What would you say?

I met with Elizabeth Warren. I think some of what she has to say is great and some of it is dumb. I'm strongly in favor of more or better antitrust regulation, more consumer protections, more transparency, of the government doing more of certain things and getting the private sector out of it.

I think her idea, on the other hand, of paying off everyone's student loans is a terrible idea, just a huge transfer to the affluent. So you know, she's on the spectrum. She's not calling for the overthrow of the state. She's just calling for another variant of a market economy.

I take very strong issue, for example, with Bernie Sanders and his condemnation of charter schools, which I think shows how totally out of touch he is, that he doesn't realize how much good charter schools have done for poor minority inner-city kids. He's probably never met one. And so his white liberal teacher's union view of, you know, charter schools are harming the public school system is just utterly, utterly misguided.

Are you familiar with a guy named Nick Hanauer? Do you consider yourself to be in his camp in some way?

I don't know if I'm in his camp. I think he's really concerned the level of inequality is unsustainable, and I'm concerned about that too. But again, other market economies don't have the same level of inequality as the United States. But you could have a lot less inequality and then you'd be Germany or you could have still less and then you'd be Sweden. Right? But you'd still be a market economy.

So just to be clear. I mean, I consider myself a progressive. And before I came to MIT, before I even went to grad school I spent several years working in nonprofits doing skills education for the poor. A lot of my work has been driven by that.

What kind of skills education were you doing?

I did computer skills training for the poor at a black Methodist church in San Francisco for three years, and then I did related work as a volunteer in South Africa.
