Technology

Update: This story has been updated to indicate that the deal is worth tens of millions, not hundreds of millions, and to include comment from other manufacturers.

It looks like all is fair in love and scooter wars. In the battle royale to become the last dock-less scooter startup standing (and un-besmirched by poop), Bird has inked what it is characterizing as exclusive deals with Ninebot (the parent company of Segway) and Xiaomi (yes, that Xiaomi) for rights to their supply of scooters for ride-sharing in the U.S.

Ninebot and Xiaomi are the current champions in the scooter manufacturing market, and locking up their supply could cut off a huge source of hardware for competitors Spin and LimeBike, both of which used Ninebot and Xiaomi for scooters.

"That's news to us, we have a contract with both," wrote an executive at a leading scooter company, when asked about the deal and its implications for the scooter business.

A person familiar with the Bird transaction placed the deal in the tens of millions of dollars and declined to speculate on what the contract with the two suppliers could mean for its competitors.

Since its launch in Santa Monica, Calif. in September 2017, Bird has become synonymous with both the perils and the promise that scooters hold for last-mile mobility. While they certainly make traveling across campuses or in relatively small communities much more convenient than car services or shuttles, they're also clogging sidewalks, parks, streets and even beaches, while creating untold numbers of minor visits to emergency rooms in the cities they've expanded into.

And Bird has expanded into a lot of cities. The company is currently operating in San Diego, Los Angeles, San Francisco, Austin, Washington, DC, Nashville and Atlanta.

Other companies in the market believe that this agreement is far less than it appears. They note that the exclusivity agreement is for a specific model of scooter, but each scooter company has its own design and those contracts are still valid. Indeed, emails from suppliers seen by TechCrunch confirm that one of the suppliers mentioned in the Bird release still intends to fulfill planned orders from a direct Bird competitor.

Sources close to Bird say that the Lime and Spin contracts should be void, given the contracts that the Santa Monica, Calif.-based company had initially signed (and then revised) with its large Chinese suppliers.

San Francisco administrators are fighting back against the scooter companies storming the sidewalks by instituting a new permitting process. The city plans to limit the number of scooters in the city to 1,250 and will require companies to register with the MTA.

- Details

- Category: Technology

Read more: Update: Bird purchases more scooters

Today brings historic firsts for both SpaceX and Bangladesh: the former is sending up the final, highly updated revision of its Falcon 9 rocket for the first time, and the latter is launching its first satellite. It's a preview of the democratized space economy to come this century.

Update: Success! The Falcon 9 first stage, after delivering the second stage to the border of space, has successfully landed on the drone ship Of Course I Still Love You, and Bangabandhu has been delivered to its target orbit.

You can watch the launch below:

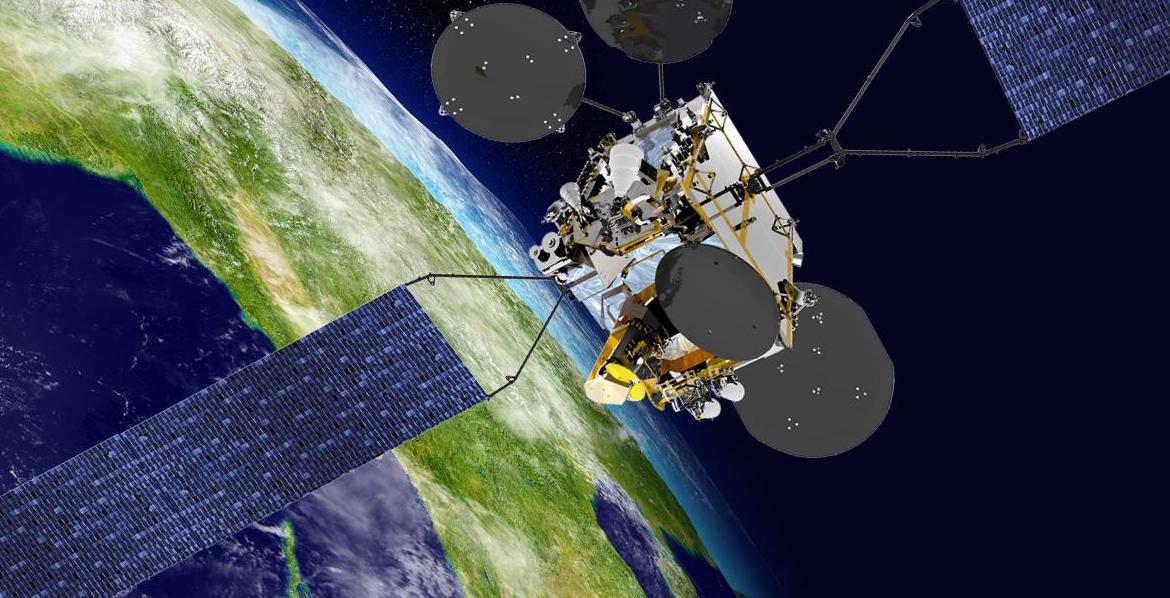

Although Bangabandhu-1 is definitely important, especially to the nation launching it, it is not necessarily in itself a highly notable satellite. It's to be a geostationary communications hub that serves the whole country and region with standard C-band and Ku-band connectivity for all kinds of purposes.

Currently the country spends some $14 million per year renting satellite time from other countries, something they determined to stop doing as a matter of national pride and independence.

"A sovereign country, in a pursuit of sustainable development, needs its own satellite in order to reduce its dependency on other nations," reads the project description at the country's Telecommunications Regulation Commission, which has been pursuing the idea for nearly a decade.

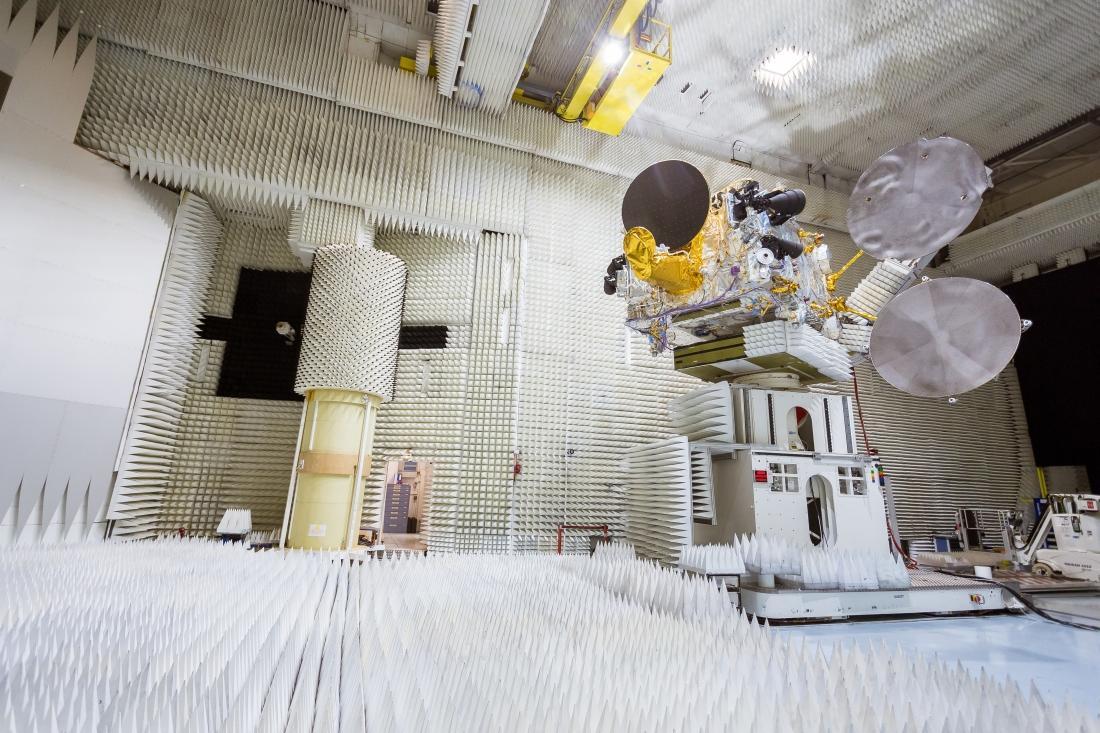

It contracted with Thales Alenia Space to produce and test the satellite, which cost about $250 million and is expected to last at least 15 years. In addition to letting the country avoid paying satellite rent, it could generate revenue by selling its services to private companies and nearby nations.
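As a rough back-of-the-envelope check on those figures, the sketch below compares the rent avoided over the satellite's minimum expected lifetime with its cost. It is only an illustration: it assumes the $14 million annual rent stays flat and ignores launch, operating and financing costs, none of which are given here, and the variable names are made up for the example.

```python
# Figures quoted in the article, in millions of US dollars.
ANNUAL_RENT_USD_M = 14        # current yearly spend on rented satellite time
SATELLITE_COST_USD_M = 250    # approximate cost to produce and test the satellite
MIN_LIFETIME_YEARS = 15       # expected minimum service life

# Assumption: rent would have stayed constant for the whole period.
rent_avoided = ANNUAL_RENT_USD_M * MIN_LIFETIME_YEARS

# Portion of the cost that avoided rent alone does not cover.
shortfall = SATELLITE_COST_USD_M - rent_avoided

# Years of avoided rent needed to match the cost, under the same assumption.
breakeven_years = SATELLITE_COST_USD_M / ANNUAL_RENT_USD_M

print(f"Rent avoided over {MIN_LIFETIME_YEARS} years: ${rent_avoided}M")
print(f"Shortfall versus satellite cost: ${shortfall}M")
print(f"Break-even on rent alone: {breakeven_years:.1f} years")
```

Under these simplified assumptions, avoided rent alone does not quite cover the cost within 15 years, which is one way to see why selling capacity to private companies and neighboring nations matters to the project.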

Bangabandhu-1 in a Thales test chamber.

"This satellite, which carries the symbolic name of the father of the nation, Bangabandhu, is a major step forward for telecommunications in Bangladesh, and a fantastic driver of economic development and heightened recognition across Asia," said the company's CEO, Jean-Loïc Galle, in a recent blog post about the project.

Bangabandhu-1 will be launching atop a SpaceX Falcon 9 rocket, but this one is different from all the others that have flown in the past. Designed with crewed missions in mind, it could be thought of as the production version of the rocket, endowed with all the refinements of years of real-world tests.

Most often referred to as Block 5, this is (supposedly) the final revision of the Falcon 9 hardware, safer and more reusable than previous versions. The goal is for a Block 5 first stage to launch a hundred times before being retired, far more than the handful of times existing Falcon 9s have been reused.

There are lots of improvements over the previous rockets, though many are small or highly technical in nature. The most important, however, are easy to enumerate.

The engines themselves have been improved and strengthened to allow not only greater thrust (reportedly about a 7-8 percent improvement) but improved control and efficiency, especially during landing. They also have a new dedicated heat shield for descent. They're rated to fly 10 times without being substantially refurbished, but are also bolted on rather than welded, further reducing turnaround time.

The legs on which the rocket lands are also fully retractable, meaning they don't have to be removed before transport. If you want to launch the same rocket within days, every minute counts.

Instead of white paint, the first stage will have a thermal coating (also white) that helps keep it relatively cool during descent.

To further reduce heat damage, the rocket's "grid fins," the waffle-iron-like flaps that pop out to control its descent, are now made of a single piece of titanium. They won't catch fire or melt during reentry like the previous aluminum ones sometimes did, and as such are now permanently attached features of the rocket.

(SpaceX founder Elon Musk is particularly proud of these fins, which flew on the Falcon Heavy side boosters; in the briefing afterwards, he said: "I'm actually glad we got the side boosters back, because they had the titanium fins. If I had to pick something to get back, it'd be those.")

Lastly (for our purposes anyway) the fuel tank has been reinforced out of concerns some had about the loading of supercooled fuel while the payload — soon to be humans, if all goes well — is attached to the rocket. This system failed before, causing a catastrophic explosion in 2016, but the fault has been addressed and the reinforcement should help further mitigate risk. (The emergency abort rockets should also keep astronauts safe should something go wrong during launch.)

The changes, though they contribute directly to reuse and cost reductions, are also aimed at satisfying the requirements of NASA's commercial crew missions. SpaceX is in competition to provide both launch and crew capsule services for missions to the ISS, scheduled for as early as late 2018. The company needs to launch the Block 5 version of Falcon 9 (not necessarily the same exact rocket) at least 7 times before any astronauts can climb aboard.


Read more: First launch of SpaceX’s revamped Falcon 9 carries Bangladesh’s space ambitions

After 26 years, Boston Dynamics is finally getting ready to start selling some robots. Founder Marc Raibert says that the company's dog-like SpotMini robot is in pre-production and preparing for commercial availability in 2019. The announcement came onstage at TechCrunch's TC Sessions: Robotics event today at UC Berkeley.

"The SpotMini robot is one that was motivated by thinking about what could go in an office, in a space more accessible for business applications, and then, the home eventually," Raibert said onstage.

Boston Dynamics' SpotMini was introduced late last year and took the design of the company's "bigger brother" quadruped Spot. While the company has often showcased advanced demos of its emerging projects, SpotMini has seemed uniquely productized from the start.

On its website, Boston Dynamics highlights that SpotMini is the "quietest robot [they] have built." The device weighs around 66 pounds and can operate for about 90 minutes on a charge.

The company says it has plans with contract manufacturers to build the first 100 SpotMinis later this year for commercial purposes, and then to scale production with the goal of selling SpotMini in 2019. They're not ready to talk about a price tag yet, but they detailed that the latest SpotMini prototype cost 10 times less to build than the iteration before it.

Just yesterday, Boston Dynamics posted a video of SpotMini in autonomous mode navigating with the curiosity of a flesh-and-blood animal.

The company, perhaps best known for gravely frightening conspiracy theorists and AI doomsdayers with advanced robotics demos, has had quite the interesting history.

It was founded in 1992 after being spun out of MIT. After a stint inside Alphabet, the company was purchased by SoftBank last year. SoftBank has staked significant investments in the robotics space through its Vision Fund, and in 2015 it began selling Pepper, a humanoid robot far less sophisticated than what Boston Dynamics has been working on.

You can watch the entire presentation below, which includes a demonstration of the latest iteration of the SpotMini.



The visual data sets of images and videos amassed by the most powerful tech companies have been a competitive advantage, a moat that keeps the advances of machine learning out of reach for many. This advantage will be overturned by the advent of synthetic data.

The world's most valuable technology companies, such as Google, Facebook, Amazon and Baidu, among others, are applying computer vision and artificial intelligence to train their computers. They harvest immense visual data sets of images, videos and other visual data from their consumers.

These data sets have been a competitive advantage for major tech companies, keeping out of reach from many the advances of machine learning and the processes that allow computers and algorithms to learn faster.

Now, this advantage is being disrupted by the ability for anyone to create and leverage synthetic data to train computers across many use cases, including retail, robotics, autonomous vehicles, commerce and much more.

Synthetic data is computer-generated data that mimics real data; in other words, data that is created by a computer, not a human. Software algorithms can be designed to create realistic simulated, or "synthetic," data.

This synthetic data then assists in teaching a computer how to react to certain situations or criteria, replacing real-world-captured training data. One of the most important aspects of real or synthetic data is to have accurate labels so computers can translate visual data to have meaning.
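To make the labeling point concrete, here is a minimal toy sketch of a synthetic data generator, not any company's actual pipeline. It assumes a deliberately simple scene: a 32x32 grayscale image with one bright rectangle on a noisy background. The function names `make_synthetic_sample` and `make_dataset` are hypothetical. Because the program decides where the object goes before drawing any pixel, the bounding box and the per-pixel mask are exact by construction.

```python
import random


def make_synthetic_sample(width=32, height=32, rng=None):
    """Render one toy image with a bright rectangle and return it with exact labels."""
    if rng is None:
        rng = random.Random()

    # Choose the object's size and position first: the label exists before the pixels.
    w = rng.randint(4, width // 2)
    h = rng.randint(4, height // 2)
    x0 = rng.randint(0, width - w)
    y0 = rng.randint(0, height - h)

    # Background is dim noise (0-63); the object is drawn with bright pixels (192-255).
    image = [[rng.randint(0, 63) for _ in range(width)] for _ in range(height)]
    mask = [[0] * width for _ in range(height)]
    for y in range(y0, y0 + h):
        for x in range(x0, x0 + w):
            image[y][x] = rng.randint(192, 255)
            mask[y][x] = 1

    # The label is generated, not hand-annotated, so it matches the image pixel for pixel.
    label = {"bbox": (x0, y0, w, h), "mask": mask}
    return image, label


def make_dataset(n, seed=0):
    """Generate n labeled samples reproducibly from a single seed."""
    rng = random.Random(seed)
    return [make_synthetic_sample(rng=rng) for _ in range(n)]


dataset = make_dataset(5)
```

A real simulator would render far richer scenes, but the principle is the same: every image arrives with its ground-truth annotation at no extra labeling cost.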

Since 2012, we at LDV Capital have been investing in deep technical teams that leverage computer vision, machine learning and artificial intelligence to analyze visual data across any business sector, such as healthcare, robotics, logistics, mapping, transportation, manufacturing and much more. Many startups we encounter have the "cold start" problem of not having enough quality labelled data to train their computer algorithms. A system cannot draw any inferences for users or items about which it hasn't yet gathered sufficient information.

Startups can gather their own contextually relevant data or partner with others to gather relevant data, such as retailers for data of human shopping behaviors or hospitals for medical data. Many early-stage startups are solving their cold start problem by creating data simulators to generate contextually relevant data with quality labels in order to train their algorithms.

Big tech companies do not have the same challenge gathering data, and they exponentially expand their initiatives to gather more unique and contextually relevant data.

Cornell Tech professor Serge Belongie, who has been doing research in computer vision for more than 25 years, says,

In the past, our field of computer vision cast a wary eye on the use of synthetic data, since it was too fake in appearance. Despite the obvious benefits of getting perfect ground truth annotations for free, our worry was that we'd train a system that worked great in simulation but would fail miserably in the wild. Now the game has changed: the simulation-to-reality gap is rapidly disappearing. At the very minimum, we can pre-train very deep convolutional neural networks on near-photorealistic imagery and fine tune it on carefully selected real imagery.

AiFi is an early-stage startup building a computer vision and artificial intelligence platform to deliver a more efficient checkout-free solution to both mom-and-pop convenience stores and major retailers. They are building a checkout-free store solution similar to Amazon Go.

Amazon.com Inc. employees shop at the Amazon Go store in Seattle. ©Amazon Go; Photographer: Mike Kane/Bloomberg via Getty Images

As a startup, AiFi had the typical cold start challenge with a lack of visual data from real-world situations to start training their computers, versus Amazon, which likely gathered real-life data to train its algorithms while Amazon Go was in stealth mode.

Avatars help train AiFi shopping algorithms. ©AiFI

AiFi's solution of creating synthetic data has also become one of their defensible and differentiated technology advantages. Through AiFi's system, shoppers will be able to come into a retail store and pick up items without having to use cash, a card or scan barcodes.

These smart systems will need to continuously track hundreds or thousands of shoppers in a store and recognize or "re-identify" them throughout a complete shopping session.

AiFi store simulation with synthetic data. ©AiFi

Ying Zheng, co-founder and chief science officer at AiFi, previously worked at Apple and Google. She says,

The world is vast, and can hardly be described by a small sample of real images and labels. Not to mention that acquiring high-quality labels is both time-consuming and expensive, and sometimes infeasible. With synthetic data, we can fully capture a small but relevant aspect of the world in perfect detail. In our case, we create large-scale store simulations and render high-quality images with pixel-perfect labels, and use them to successfully train our deep learning models. This enables AiFi to create superior checkout-free solutions at massive scale.

Robotics is another sector leveraging synthetic data to train robots for various activities in factories, warehouses and across society.

Josh Tobin is a research scientist at OpenAI, a nonprofit artificial intelligence research company that aims to promote and develop friendly AI in such a way as to benefit humanity as a whole. Tobin is part of a team working on building robots that learn. They have trained entirely with simulated data and deployed on a physical robot, which, amazingly, can now learn a new task after seeing an action done once.

They developed and deployed a new algorithm called one-shot imitation learning, allowing a human to communicate how to do a new task by performing it in virtual reality. Given a single demonstration, the robot is able to solve the same task from an arbitrary starting point and then continue the task.

©Open AI

Their goal was to learn behaviors in simulation and then transfer these learnings to the real world. The hypothesis was to see if a robot can do precise things just as well from simulated data. They started with 100 percent simulated data and thought that it would not work as well as using real data to train computers. However, the simulated data for training robotic tasks worked much better than they expected.

Tobin says,

Creating an accurate synthetic data simulator is really hard. There is a factor of 3-10x in accuracy between a well-trained model on synthetic data versus real-world data. There is still a gap. For a lot of tasks the performance works well, but for extreme precision it will not fly — yet.

Many large technology companies, auto manufacturers and startups are racing toward delivering the autonomous vehicle revolution. Developers have realized there aren't enough hours in a day to gather enough real data of driven miles needed to teach cars how to drive themselves.

One solution that some are using is synthetic data from video games such as Grand Theft Auto; unfortunately, some say that the game's parent company Rockstar is not happy about driverless cars learning from their game.

A street in GTA V (left) and its reconstruction through capture data (right). ©Intel Labs, Technische Universität Darmstadt

May Mobility is a startup building a self-driving microtransit service. Their CEO and founder, Edwin Olson, says,

One of our uses of synthetic data is in evaluating the performance and safety of our systems. However, we don't believe that any reasonable amount of testing (real or simulated) is sufficient to demonstrate the safety of an autonomous vehicle. Functional safety plays an important role.

The flexibility and versatility of simulation make it especially valuable and much safer to train and test autonomous vehicles in these highly variable conditions. Simulated data can also be more easily labeled as it is created by computers, therefore saving a lot of time.

Jan Erik Solem is the CEO and co-founder of Mapillary*, helping create better maps for smarter cities, geospatial services and automotive. According to Solem,

Having a database and an understanding of what places look like all over the world will be an increasingly important component for simulation engines. As the accuracy of the trained algorithms improves, the level of detail and diversity of the data used to power the simulation matters more and more.

Neuromation is building a distributed synthetic data platform for deep learning applications. Their CEO, Yashar Behzadi says,

To date, the major platform companies have leveraged data moats to maintain their competitive advantage. Synthetic data is a major disruptor, as it significantly reduces the cost and speed of development, allowing small, agile teams to compete and win.

The challenge and opportunity for startups competing against incumbents with inherent data advantage is to leverage the best visual data with correct labels to train computers accurately for diverse use cases. Simulating data will level the playing field between large technology companies and startups. Over time, large companies will probably also create synthetic data to augment their real data, and one day this may tilt the playing field again. Many speakers at the annual LDV Vision Summit in May in NYC will enlighten us as to how they are using simulated data to train algorithms to solve business problems and help computers get closer to general artificial intelligence.

*Mapillary is an LDV Capital portfolio company.


Google Clips' AI-powered "smart camera" just got even smarter, Google announced today, revealing improved functionality around Clips' ability to automatically capture specific moments, like hugs and kisses. Or jumps and dance moves. You know, in case you want to document all your special, private moments in a totally non-creepy way.

I kid, I kid!

Well, not entirely. Let me explain.

Look, Google Clips comes across to me as more of a proof-of-concept device that showcases the power of artificial intelligence as applied to the world of photography rather than a breakthrough consumer device.

I'm the target market for this camera, a parent and a pet owner (and look how cute she is), but I don't at all have a desire for a smart camera designed to capture those tough-to-photograph moments, even though neither my kid nor my pet will sit still for pictures.

I've tried to articulate this feeling, and I find it's hard to say why I don't want this thing, exactly. It's not because the photos are automatically uploaded to the cloud or made public; they are not. They are saved to the camera's 16 GB of onboard storage and can be reviewed later with your phone, where you can then choose to keep them, share them or delete them. And it's not even entirely because of the price point, though, arguably, even with the recent $50 discount it's quite the expensive toy at $199.

Maybe it's just the camera's premise.

That in order for us to fully enjoy a moment, we have to capture it. And because some moments are so difficult to capture, we spend too much time with phone-in-hand, instead of actually living our lives — like playing with our kids or throwing the ball for the dog, for example. And that the only solution to this problem is more technology. Not just putting the damn phone down.

What also irks me is the broader idea behind Clips that all our precious moments have to be photographed or saved as videos. They do not. Some are meant to be ephemeral. Some are meant to be memories. In aggregate, our hearts and minds tally up all these little life moments (a hug, a kiss, a smile) and then turn them into feelings. Bonds. Love. It's okay to miss capturing every single one.

I'm telling you, it's okay.

At the end of the day, there are only a few times I would have even considered using this product: when baby was taking her first steps, and I was worried it would happen while my phone was away. Or maybe some big event, like a birthday party, where I wanted candids but had too much going on to take photos. But even in these moments, I'd rather prop my phone up and turn on a "Google Clips" camera mode, rather than shell out hundreds for a dedicated device.

Just saying.

You may feel differently. That's cool. To each their own.

Anyway, what I think is most interesting about Clips is the actual technology. That it can view things captured through a camera lens and determine the interesting bits, and that it's already getting better at this, only months after its release. That we're teaching AI to understand what's actually interesting to us humans, with our subjective opinions. That sort of technology has all kinds of practical applications beyond a physical camera that takes spy shots of Fido.

The improved functionality is rolling out to Clips with the May update, and will soon be followed by support for family pairing, which will let multiple family members connect the camera to their device to view content.

Here's an intro to Clips, if you missed it the first time. (See below)

Note that it's currently on sale for $199. Yeah, already. Hmmm.


NBA Eastern Conference Finals - when and where

The first game in a best of seven series between the Cleveland Cavaliers and the Boston Celtics will take place on Sunday May 13th at TD Garden in Boston. The game starts at 3:30pm ET (That’s at 12:30pm PT on the West Coast and at 8:30pm BST in the UK) and there will be six more games throughout the m


Read more: How to watch the Celtics vs Cavs: live stream the 2018 NBA Eastern Conference Finals live
